TL;DR:

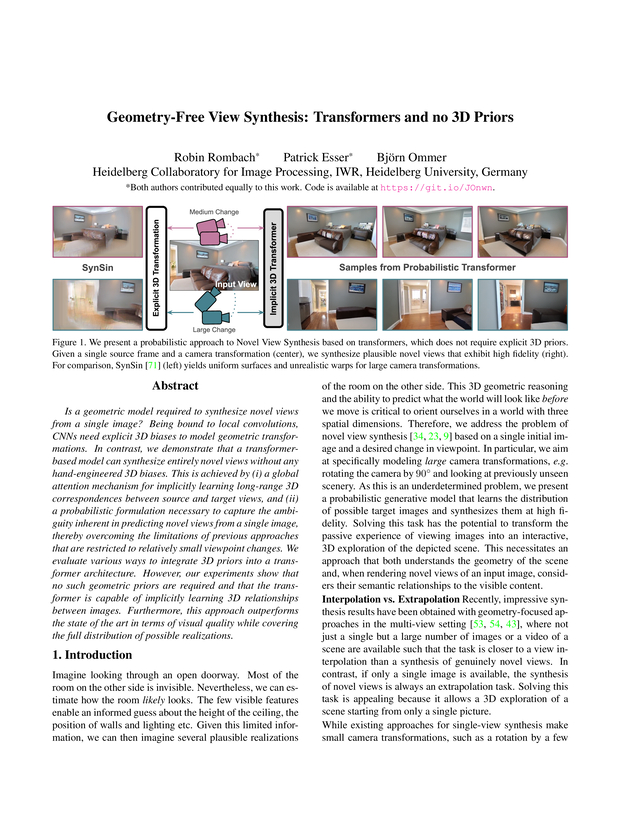

We present a probabilistic approach to Novel View Synthesis based on transformers, which does not require explicit 3D priors. Given a single source frame and a camera transformation (center), we synthesize plausible novel views that exhibit high fidelity (right). For comparison, SynSin (left) yields uniform surfaces and unrealistic warps for large camera transformations.


Abstract

Is a geometric model required to synthesize novel views from a single image? Being bound to local convolutions, CNNs need explicit 3D biases to model geometric transformations. In contrast, we demonstrate that a transformer-based model can synthesize entirely novel views without any hand-engineered 3D biases. This is achieved by (i) a global attention mechanism for implicitly learning long-range 3D correspondences between source and target views, and (ii) a probabilistic formulation necessary to capture the ambiguity inherent in predicting novel views from a single image, thereby overcoming the limitations of previous approaches that are restricted to relatively small viewpoint changes. We evaluate various ways to integrate 3D priors into a transformer architecture. However, our experiments show that no such geometric priors are required and that the transformer is capable of implicitly learning 3D relationships between images. Furthermore, this approach outperforms the state of the art in terms of visual quality while covering the full distribution of possible realizations.

Results and applications of our model.

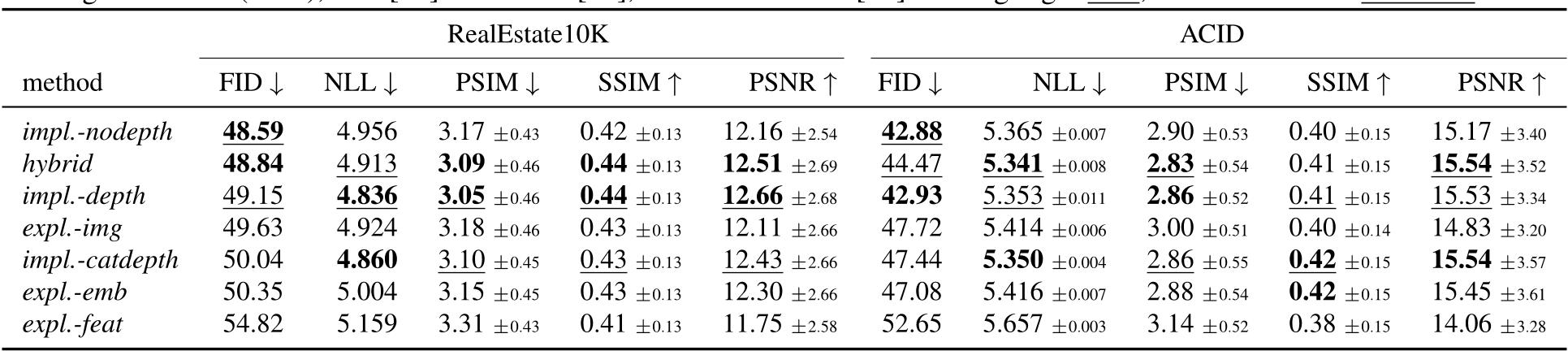

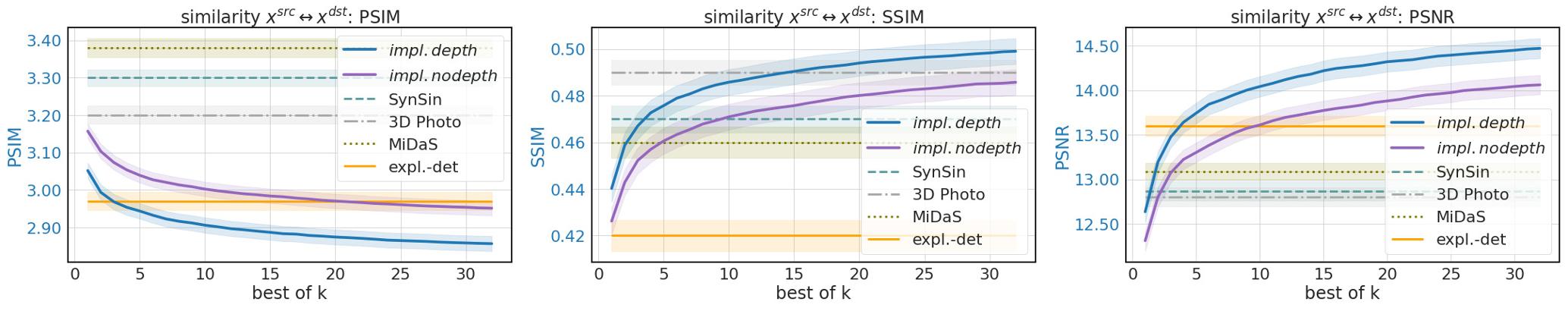

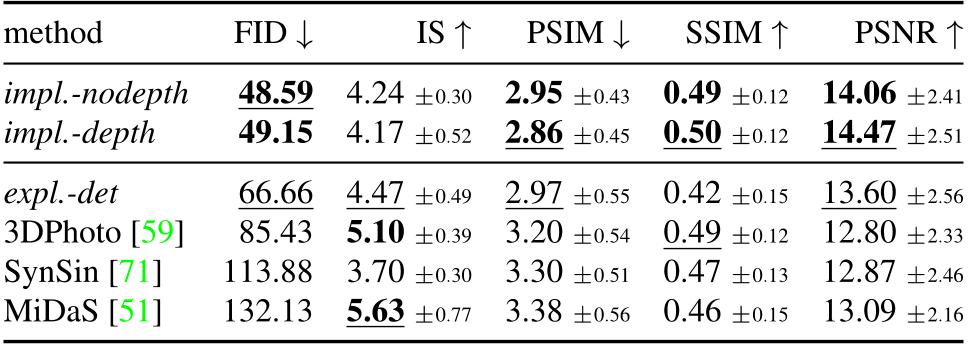

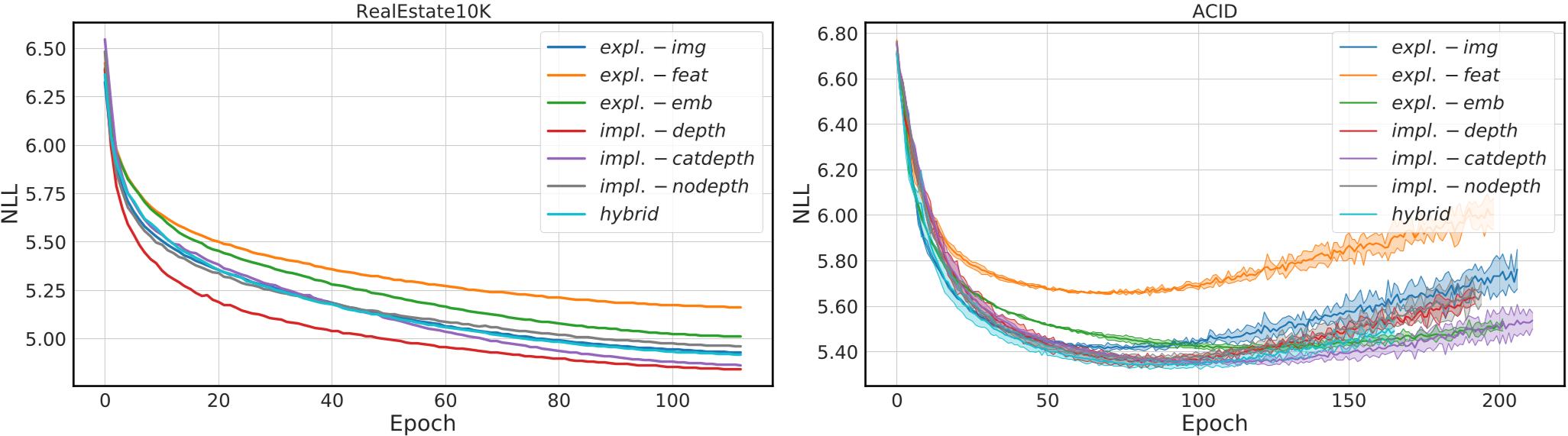

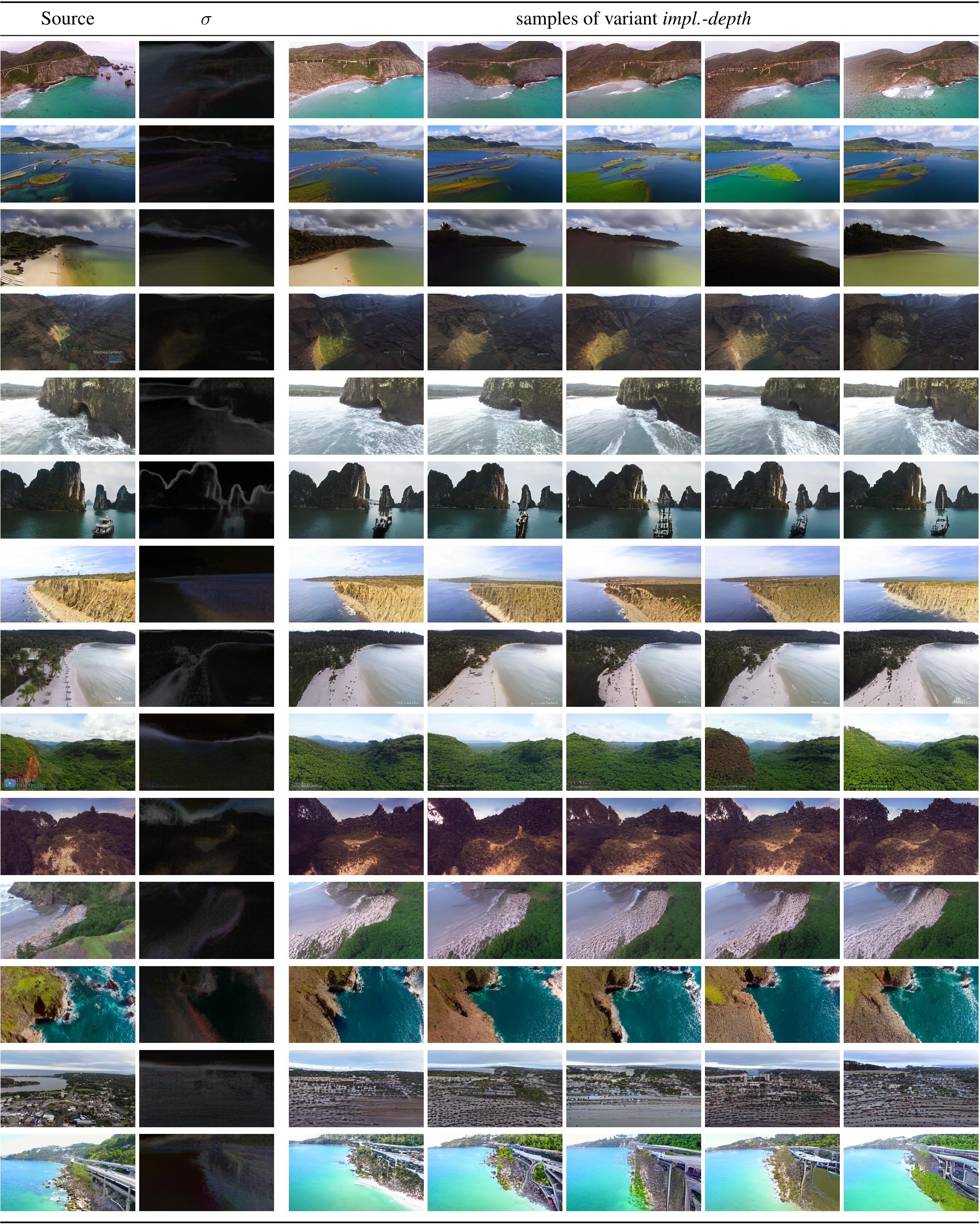

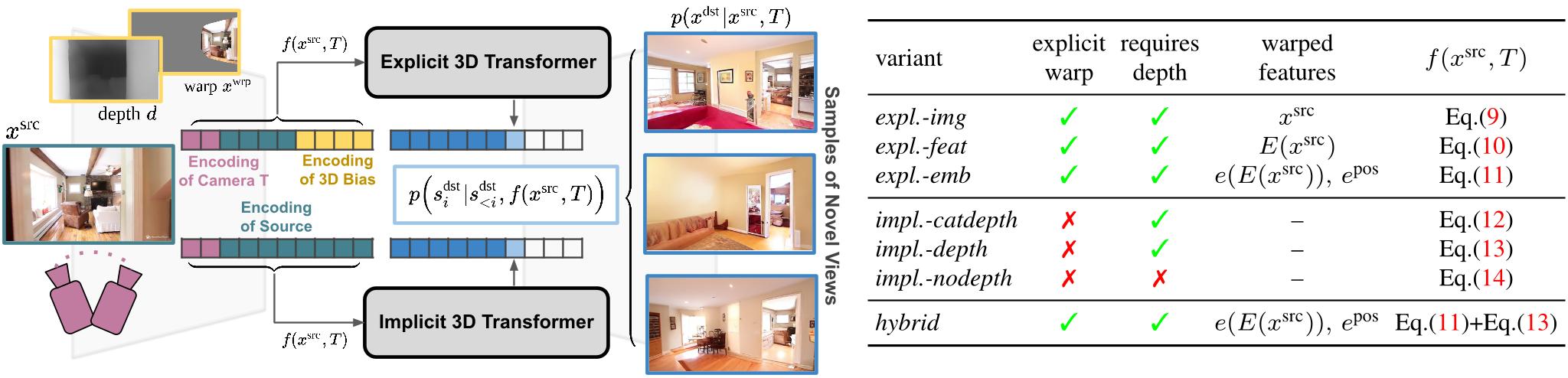

Figure 2. We formulate novel view synthesis as sampling from the distribution $p(x_{\text{dst}} \mid x_{\text{src}}, T)$ of target images $x_{\text{dst}}$ for a given source image $x_{\text{src}}$ and camera change $T$. We use a VQGAN to model this distribution autoregressively with a transformer and introduce a conditioning function $f(x_{\text{src}}, T)$ to encode inductive biases into our model. We analyze explicit variants, which estimate scene depth $d$ and warp source features into the novel view, as well as implicit variants without such warping. The table on the right summarizes the variants for $f$.


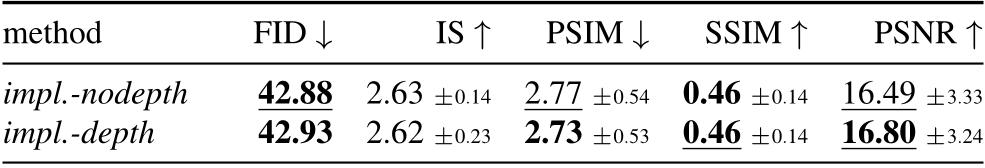

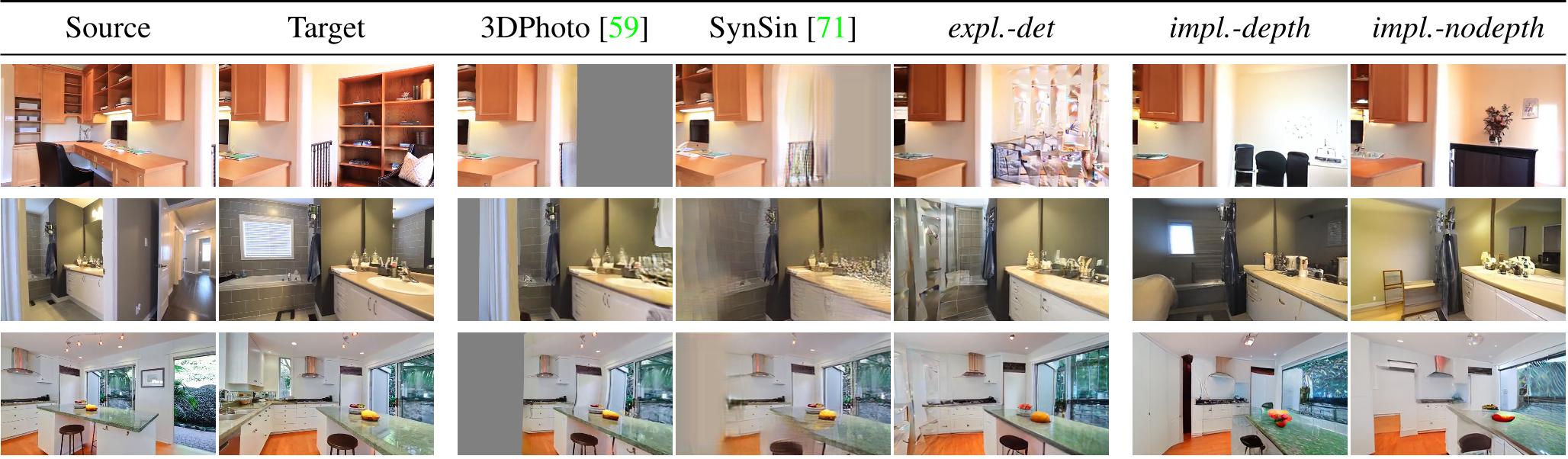

Figure 5. Qualitative Results on RealEstate10K: We compare three deterministic convolutional baselines (3DPhoto [59], SynSin [71], expl.-det) to our implicit variants impl.-depth and impl.-nodepth. Ours is able to synthesize plausible novel views, whereas others produce artifacts or blurred, uniform areas. The depicted target is only one of many possible realizations; we visualize samples in the supplement.
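
Because the model is probabilistic, each depicted target is one draw from $p(x_{\text{dst}} \mid x_{\text{src}}, T)$. The sketch below shows, under clearly stated assumptions, how autoregressive sampling of discrete target codes yields different realizations from the same conditioning: `toy_logits` is a hypothetical stand-in for the transformer, `cond` is a hypothetical stand-in for the tokens produced by $f(x_{\text{src}}, T)$, and decoding the sampled codes back to an image with the VQGAN decoder is omitted.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_logits(prefix, cond, codebook_size):
    """Hypothetical stand-in for the transformer: logits for the next target code
    given previously sampled codes (prefix) and conditioning tokens (cond).
    Toy rule: favor the conditioning token at the same position."""
    target = cond[len(prefix) % len(cond)]
    return [2.0 if k == target else 0.0 for k in range(codebook_size)]


def sample_target_codes(cond, num_codes, codebook_size, temperature=1.0, rng=None):
    """Sample target codes one at a time, each conditioned on all previous ones."""
    rng = rng or random.Random()
    codes = []
    for _ in range(num_codes):
        logits = [l / temperature for l in toy_logits(codes, cond, codebook_size)]
        probs = softmax(logits)
        codes.append(rng.choices(range(codebook_size), weights=probs, k=1)[0])
    return codes
```

Different seeds generally give different code sequences, mirroring the many plausible novel views for one input; lowering `temperature` concentrates the probability mass and approaches greedy decoding.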


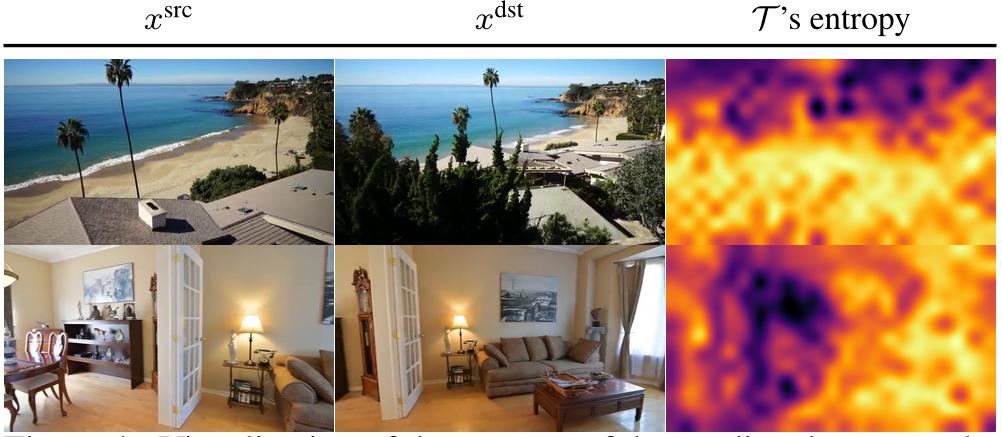

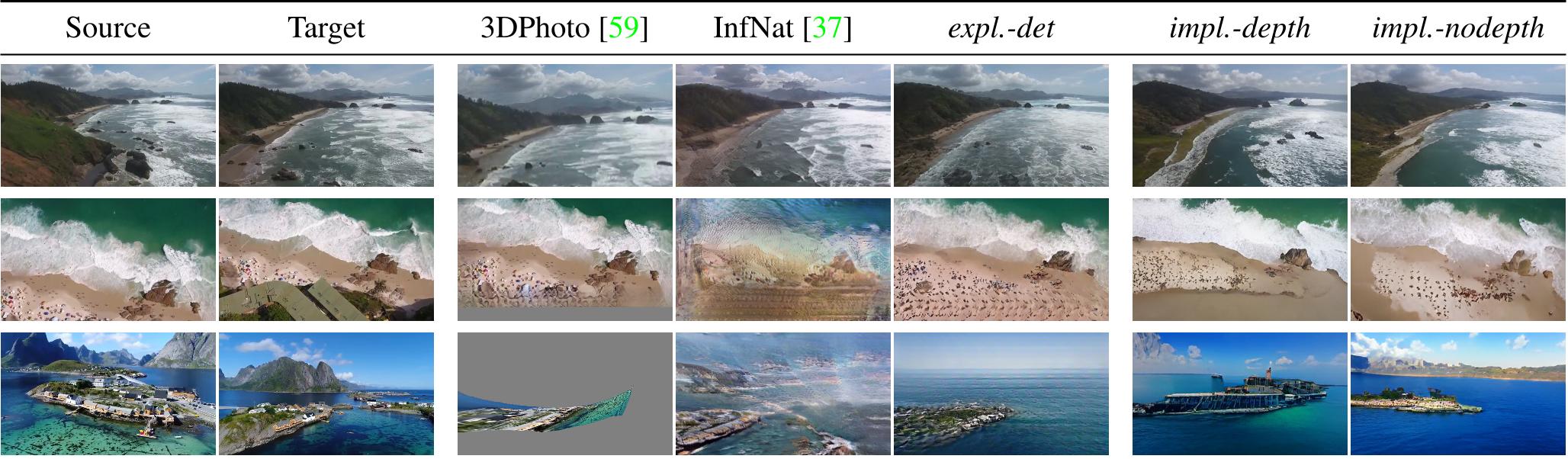

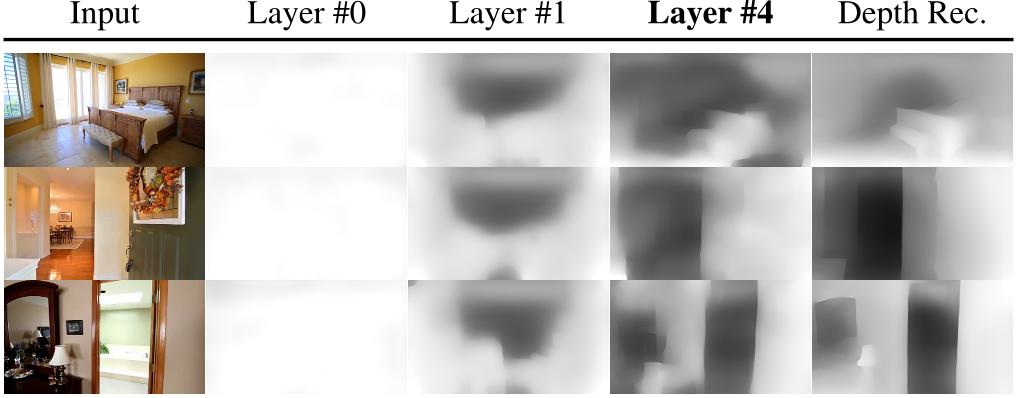

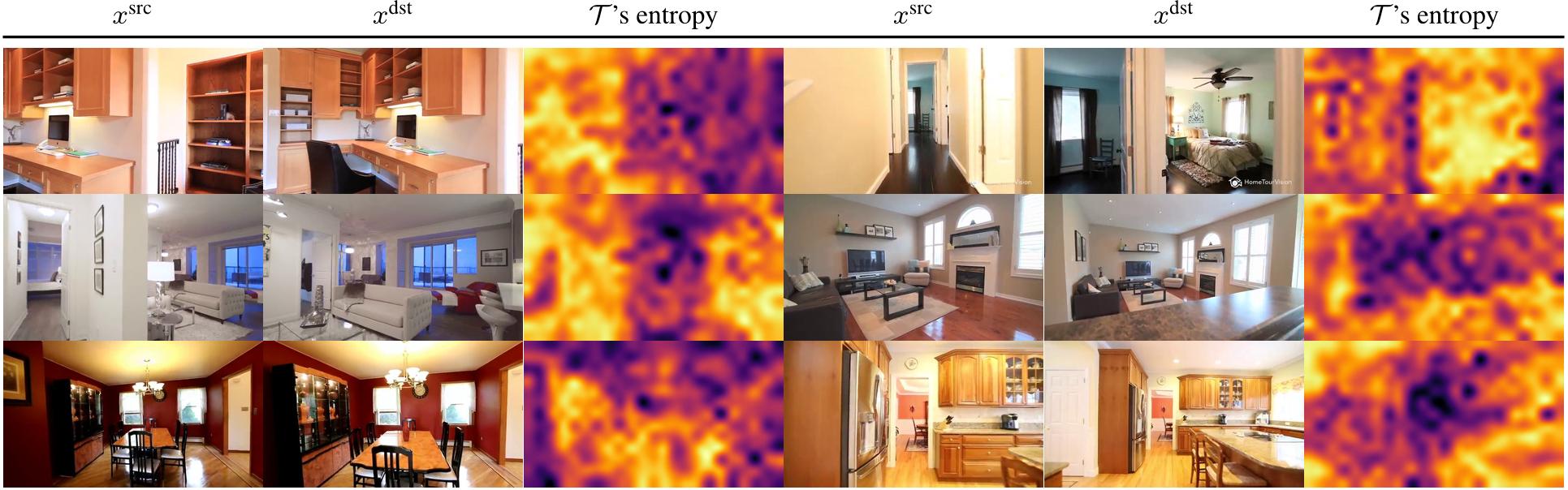

Figure 16. Additional visualizations of the entropy of the predicted target code distribution for impl.-nodepth. Increased confidence (darker colors) in regions that are visible in the source image indicates the model's ability to relate source and target geometrically, without a 3D bias. See also Sec. 4.1 and Sec. D.
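
The entropy shown here is a per-position quantity. As a minimal sketch, assuming the model outputs one vector of logits over codebook entries for each target position (the logarithm base used for the figure is not stated; this sketch uses nats), the entropy map can be computed as follows. Lower entropy corresponds to higher confidence, which the figure renders in darker colors; the function names are illustrative.

```python
import math


def entropy_from_logits(logits):
    """Shannon entropy (in nats) of the categorical distribution softmax(logits)."""
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    z = sum(exps)
    probs = [e / z for e in exps]
    # Skip zero-probability entries, since p * log(p) -> 0 as p -> 0.
    return -sum(p * math.log(p) for p in probs if p > 0)


def entropy_map(logits_per_position):
    """Entropy for each target code position; reshape to the code grid to visualize."""
    return [entropy_from_logits(l) for l in logits_per_position]
```

A uniform distribution over 4 codes gives the maximum entropy $\log 4$, while a sharply peaked one gives a value close to zero.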
