Abstract

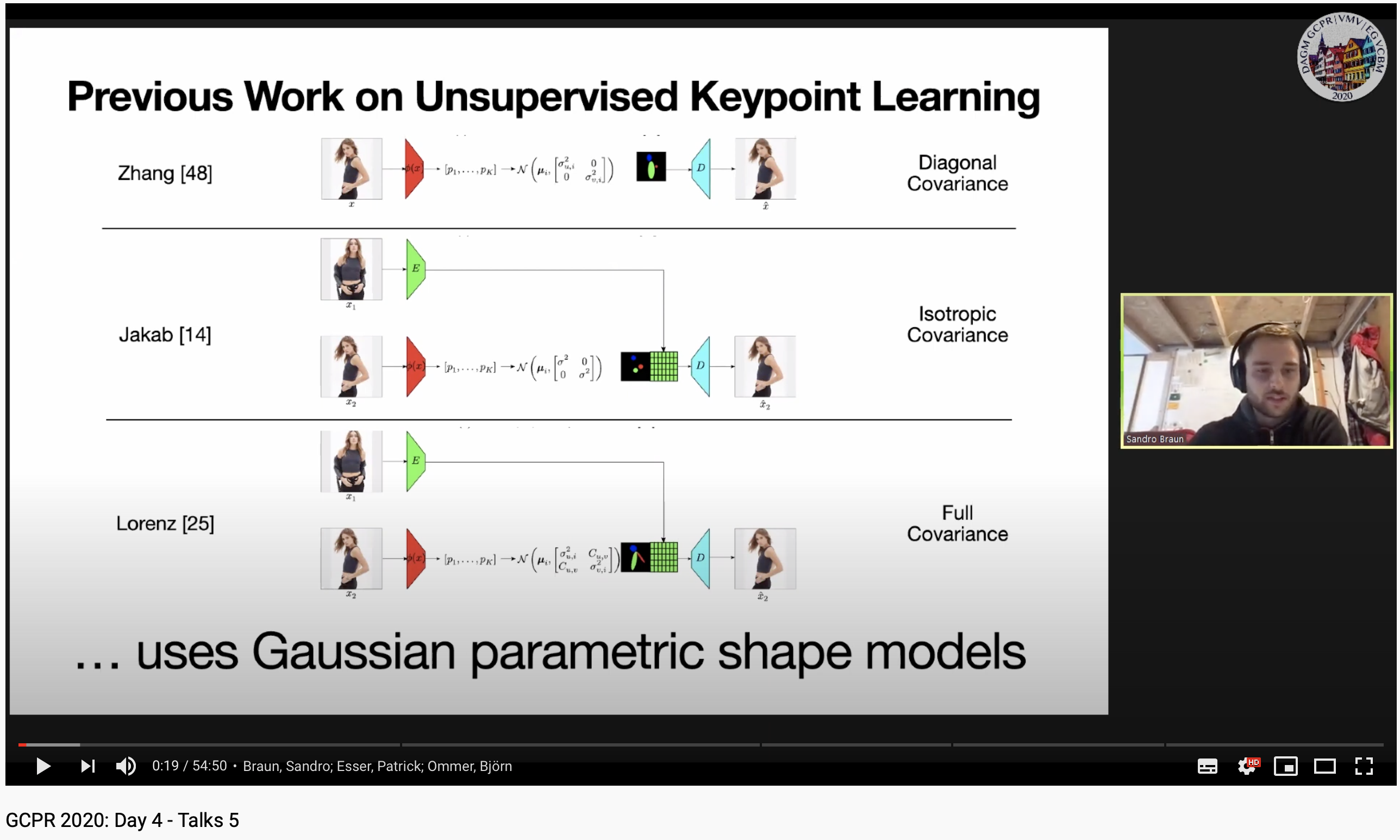

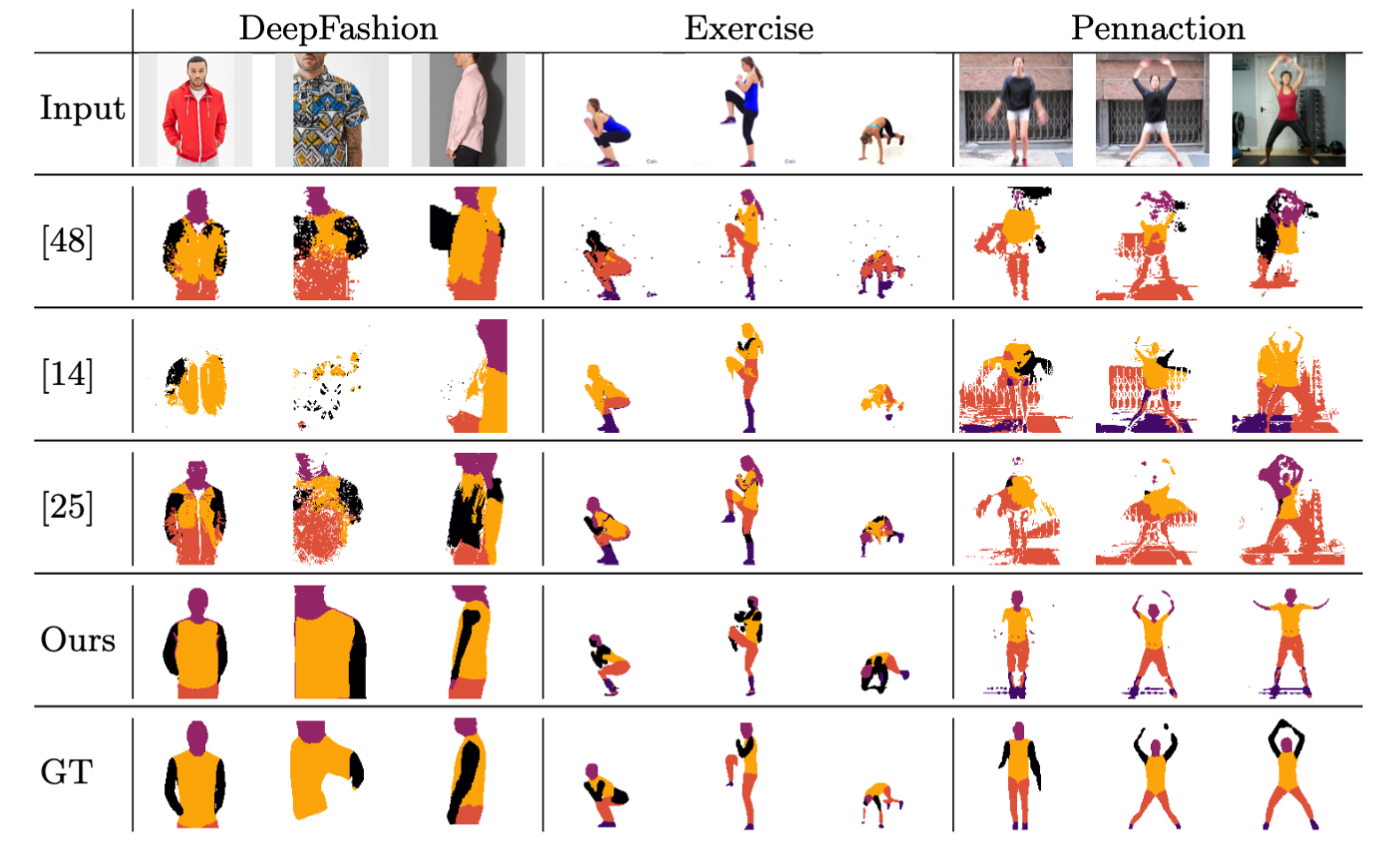

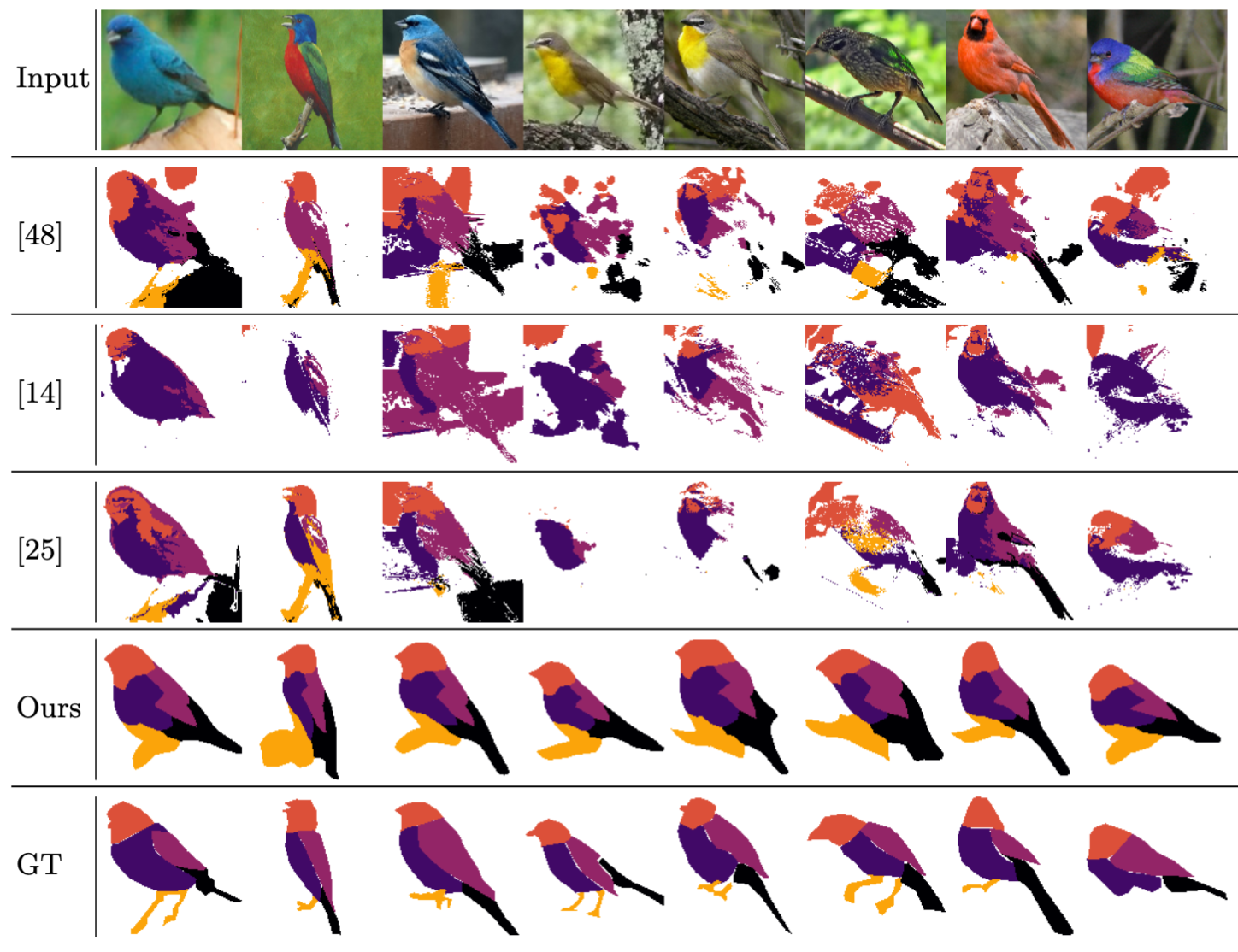

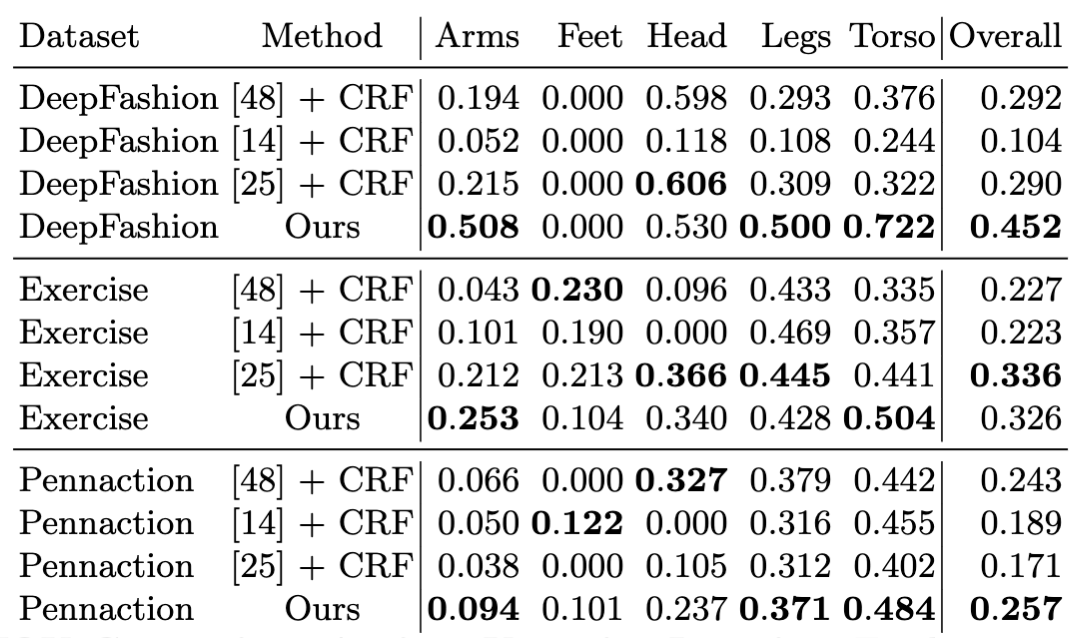

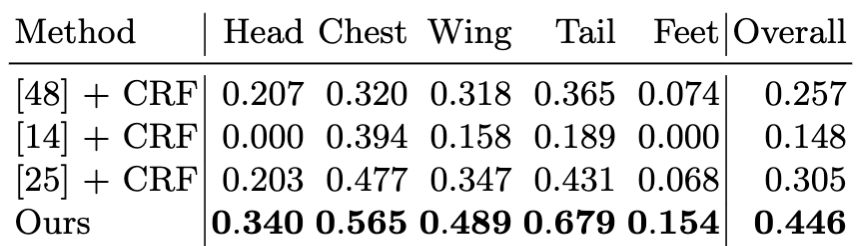

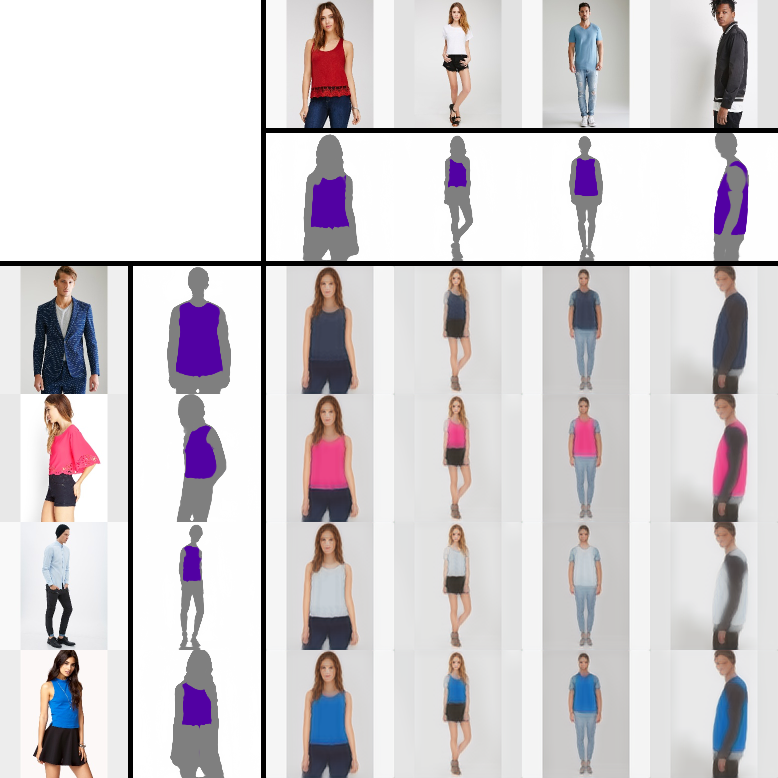

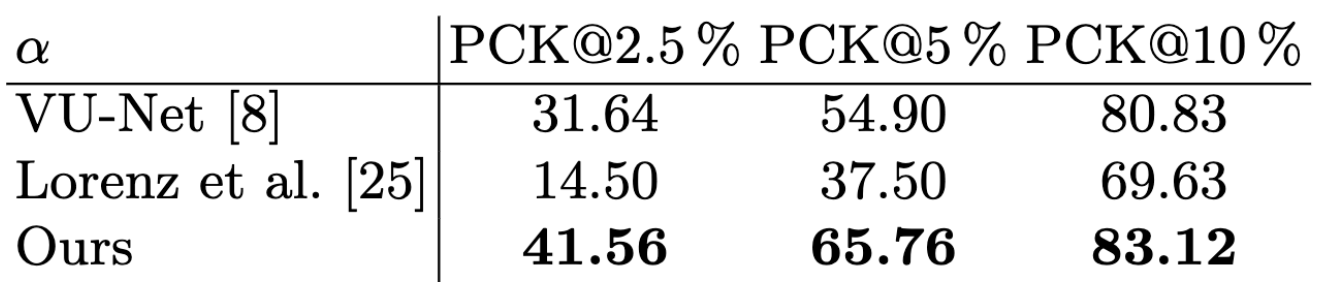

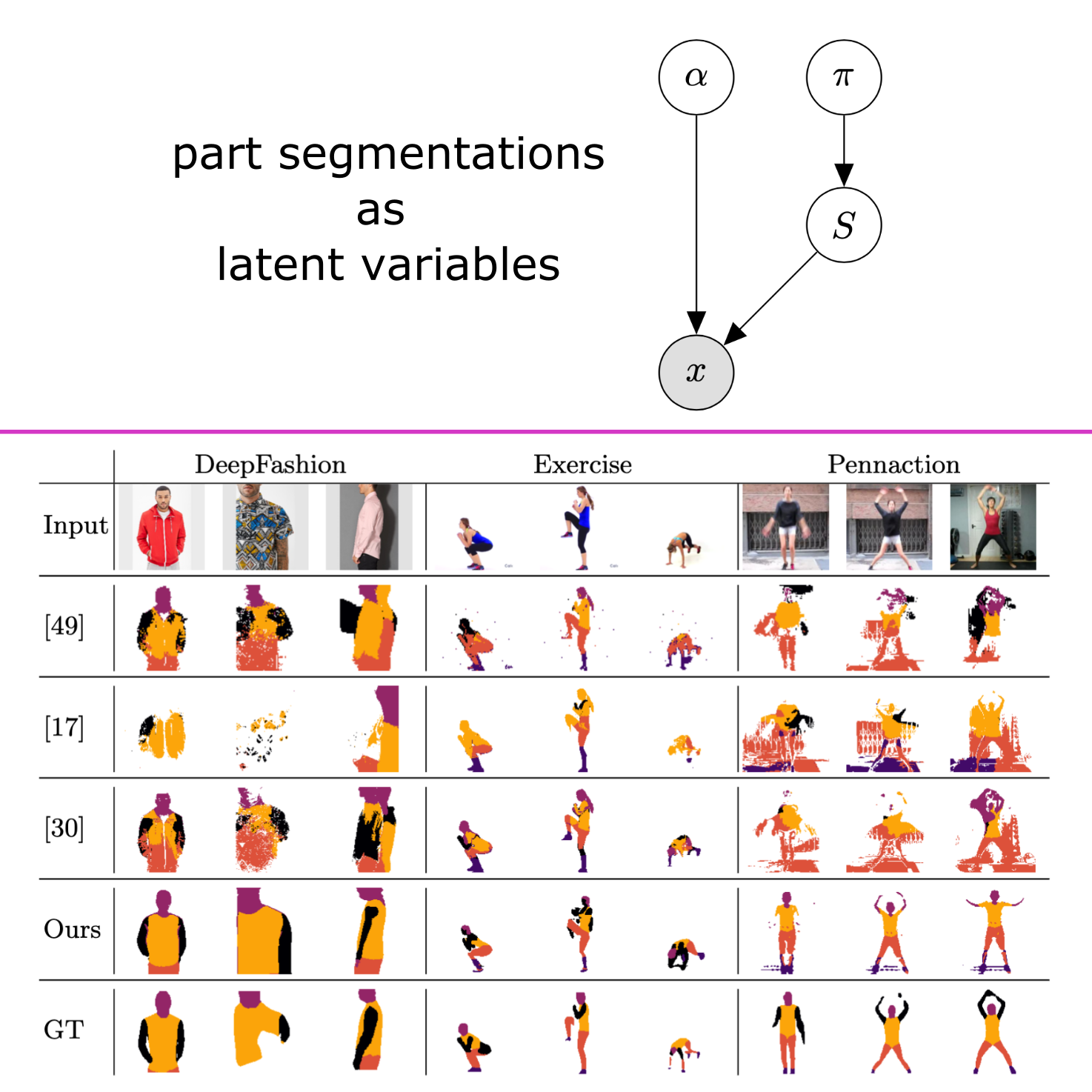

We address the problem of discovering part segmentations of articulated objects without supervision. In contrast to keypoints, part segmentations provide information about part localizations on the level of individual pixels. Capturing both locations and semantics, they are an attractive target for supervised learning approaches. However, large annotation costs limit the scalability of supervised algorithms to object categories other than humans. Unsupervised approaches can potentially use much more data at a lower cost. Most existing unsupervised approaches focus on learning abstract representations that must then be refined with supervision into the final representation. Our approach leverages a generative model consisting of two disentangled representations for an object's shape and appearance and a latent variable for the part segmentation. From a single image, the trained model infers a semantic part segmentation map. In experiments, we compare our approach to previous state-of-the-art approaches and observe significant gains in segmentation accuracy and shape consistency. Our work demonstrates the feasibility of discovering semantic part segmentations without supervision.

Our part segmentation method leverages a disentangled representation for shape and appearance to discover semantic part segmentations without supervision.
